Google prompting essentials extended course

Estimated reading time: 10 minutes

I took Google’s prompting essentials course on Coursera, and this is the extended version of that course. It covers all the modules in greater depth and includes additional examples that were not part of the main course.



Module 1: Mitigate hallucinations

Hallucinations are one of the biggest limitations of generative AI: outputs that sound plausible but are inaccurate or made up. They tend to occur when the AI receives vague or unclear instructions, or when it is asked about topics it hasn’t been well trained on. To mitigate them, fact-check the results and write clear, detailed prompts.

Fact-check AI outputs

Some AI tools have built-in fact-checking capabilities that cite sources for the information in their outputs. If this feature isn’t available, you can verify AI-generated content manually with a search engine. Doing so helps you catch hallucinations before you rely on the output.

Use clearer, more detailed language

A generative AI tool may also go wrong when the prompt itself contains incorrect information. For example, if you ask, “Why is Toronto the capital of Canada?” the AI might accept that false premise as true. Since the capital of Canada is Ottawa, not Toronto, the AI could generate an incorrect explanation and a false history of Canada. Check the facts in your prompt and state them precisely before you send it.


Pro tip

When in doubt, clear the memory

Clearing the memory of an AI tool is an effective way to improve accuracy and reduce hallucinations. This technique gives the tool a fresh start, allowing it to process new prompts with a clean slate. A fresh start helps with:

- Bias: Prevents the tool from carrying forward assumptions or stereotypes from earlier prompts.

- Confusion: Ensures the tool focuses solely on the current task and context.

- Privacy risks: Removes potentially sensitive information from previous interactions.

- Troubleshooting: Refreshes the tool when it seems stuck or produces unexpected results.

Note on clearing memory: some AI tools automatically clear their memory each time you open them, so they don’t remember what you previously asked.


Module 2: Prompt with personality

Ever noticed how some AI-generated text sounds a bit robotic? This often happens because generative AI relies on contextual understanding to interpret tone and style from your prompt. That’s why it’s important to learn strategies for adding tone and style to your prompts.

Strategies for adding tone and style in prompting

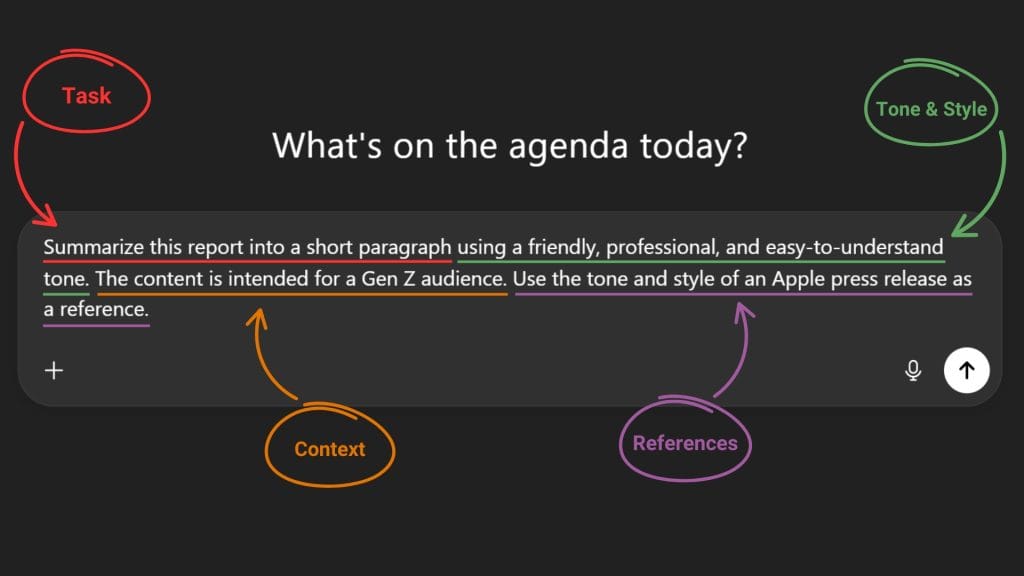

There are three strategies for adding tone and style to your prompts. These strategies are based on the prompting framework. Below are the strategies, each followed by an example.

Specify your tone and style

When prompting, start by using the prompting framework to define the task. Then, include your desired tone and style within that task.

For example, if you need to summarize a report, instead of simply writing:

“Summarize this report into a short paragraph.”

You can enhance it by adding tone and style, turning the basic prompt into:

“Summarize this report into a short paragraph in a friendly, professional, and easy-to-understand tone.”

Provide tone and style references

The second technique is to provide a reference in your prompt. Let’s take the previous example and add a reference to it:

“Summarize this report into a short paragraph in a friendly, professional, and easy-to-understand tone. Consider the tone and style of an Apple press release as a reference.”

Iterate on tone and style

If the previous prompt produces an output that doesn’t feel quite right, the final technique, iterating, can bring you closer to your intended result. Iterating means revising the prompt and trying again. In this case, the revision adds context.

Suppose the report is intended for a Gen Z audience. Let’s take the previous example and iterate on the prompt by adding this context:

“Summarize this report into a short paragraph using a friendly, professional, and easy-to-understand tone. The content is intended for a Gen Z audience. Use the tone and style of an Apple press release as a reference.”
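The three strategies build on each other, so they can be sketched as one small helper that layers tone, audience, and reference onto the base task. This is only an illustration: the function name `build_summary_prompt` and its parameters are my own, not something from the course.

```python
def build_summary_prompt(tone=None, audience=None, reference=None):
    """Assemble the summary prompt, adding each optional element only if given."""
    prompt = "Summarize this report into a short paragraph"
    if tone:
        # Strategy 1: specify the tone and style inside the task itself.
        prompt += f" using a {tone} tone"
    prompt += "."
    if audience:
        # Strategy 3: iterate by adding context about the audience.
        prompt += f" The content is intended for a {audience} audience."
    if reference:
        # Strategy 2: point the AI at a tone and style reference.
        prompt += f" Use the tone and style of {reference} as a reference."
    return prompt


print(build_summary_prompt())
print(build_summary_prompt(
    tone="friendly, professional, and easy-to-understand",
    audience="Gen Z",
    reference="an Apple press release",
))
```

The first call prints the basic prompt, and the second reproduces the fully iterated prompt shown above.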

Why prompting for tone and style matters

Nuance matters when generating AI outputs. The more specific you are with your prompts, the more personalized the results will be. So get specific, and describe the tone and style you want the output to have.

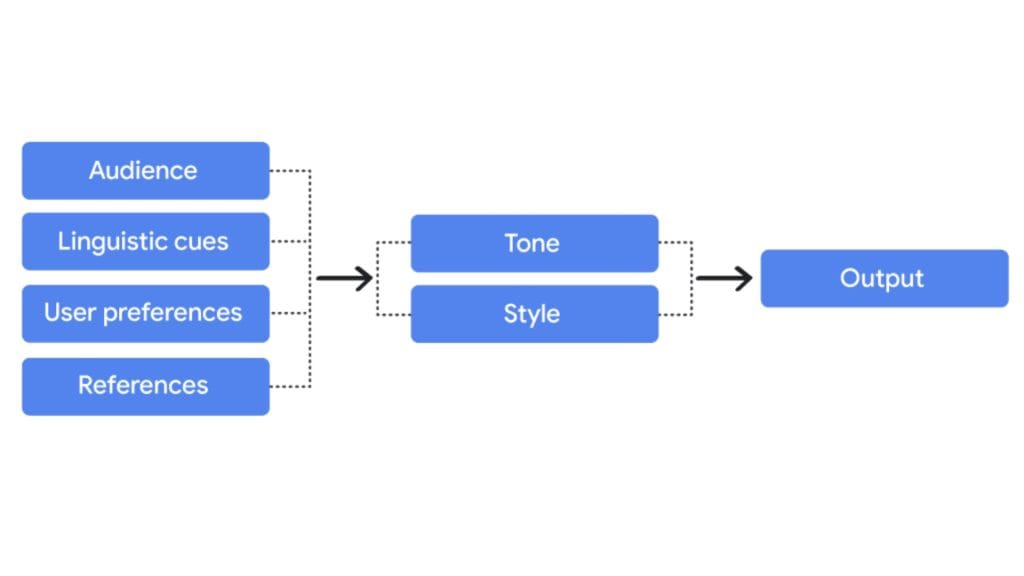

Contextual understanding

Generative AI chatbots develop contextual understanding by training on a massive training set that includes everyday conversations. This understanding helps AI tools to:

- Identify audiences.

- Recognize linguistic cues, like a question mark at the end of a sentence.

- Adapt to your preferences.

- Learn language from provided references.

- Understand inputs and generate outputs in the tone and style you provide.

- Stay consistent in the language it uses.

Consider asking an AI chatbot, “What’s up?” It understands that you’re likely asking how it’s doing. The AI recognizes this common greeting from its training data and may respond with something like, “Not much, how about you?”

This example demonstrates contextual understanding, a process that enables generative AI to interpret prompts based on their meaning and setting. It allows the AI to comprehend the request and generate an appropriate response.

Module 3: Fine-tune AI outputs by adjusting tool settings

Have you noticed that the same prompt can produce different results? That’s because probability plays a role in generative AI when determining what information to use in its output.

You can influence the likelihood of certain outputs by adjusting the settings known as “sampling parameters.” Modifying options like temperature and top-k or top-p sampling can help reduce hallucinations and generate more specific results.

Temperature

When AI generates text, it doesn’t consider every possible word or sentence. Instead, it samples from a range of likely options based on patterns learned from its training data. “Sampling parameters” allow you to expand or narrow the pool of options the AI chooses from when generating an output.

The sampling parameter that determines the probability of a word or sentence being selected from that pool is called “temperature.” Temperature controls how likely the AI is to choose common, familiar responses versus more niche or unexpected ones. A lower temperature leads to more predictable outputs, while a higher temperature allows for more creativity and randomness.

Temperature sampling

Let’s picture a scenario where you’re telling a friend about what you did earlier this morning. A phrase that begins with “This morning, I went to the…” could lead in many different directions. Words like “grocery store” or “parking lot” are more likely to follow than less typical options like “haunted house” or “moon.”

To better illustrate this concept, let’s revisit the phrase: “This morning, I went to the…” This time, the generative AI produces a list of possible next words, each assigned a probability based on how likely it is to appear.

Suppose “grocery store” has the highest probability (0.41), meaning the tool considers it the best fit to complete the phrase. By adjusting the temperature setting, you can control the level of randomness in how the AI selects words from the list of possibilities.

- “Low temperature” (e.g., 0.05) makes the AI strongly favor words with the highest probability. In this case, it would likely choose “grocery store.”

- “High temperature” (e.g., 2) adds more randomness and creativity to the response, making it more likely to choose a word with a lower probability, such as “haunted house.”
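To see the effect in numbers, here is a minimal sketch of temperature scaling. It assumes the common formulation in which each probability is raised to the power 1/T and the results are renormalized (equivalent to dividing the model’s logits by T). Only the 0.41 for “grocery store” comes from the example above; the other probabilities and the name `apply_temperature` are invented for illustration.

```python
# Next-word probabilities for "This morning, I went to the..."
# Only 0.41 comes from the example; the other values are hypothetical.
NEXT_WORD_PROBS = {
    "grocery store": 0.41,
    "parking lot": 0.30,
    "haunted house": 0.21,
    "moon": 0.08,
}


def apply_temperature(probs, temperature):
    """Raise each probability to 1/T, then renormalize so they sum to 1."""
    if temperature <= 0:
        raise ValueError("temperature must be greater than 0")
    scaled = {word: p ** (1.0 / temperature) for word, p in probs.items()}
    total = sum(scaled.values())
    return {word: value / total for word, value in scaled.items()}


for temperature in (0.05, 1.0, 2.0):
    adjusted = apply_temperature(NEXT_WORD_PROBS, temperature)
    summary = ", ".join(f"{word}: {p:.3f}" for word, p in adjusted.items())
    print(f"T={temperature}: {summary}")
```

At a temperature of 0.05, almost all of the probability lands on “grocery store.” At 2, the distribution flattens, so “haunted house” and “moon” are picked more often than before.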

Top-k sampling

Other sampling parameters determine how many candidate tokens a generative AI can choose from. One of these is called top-k. The top-k value limits the selection to the k most likely tokens, helping you get more precise and controlled results.

Setting top-k to its minimum value of 1 means the AI always picks the single most likely token. This is known as greedy decoding. With this setting, the AI should produce the same output for the same prompt, so the results are no longer random but effectively deterministic.
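A rough sketch of top-k filtering: keep the k most likely tokens, discard the rest, and renormalize what remains. The probabilities other than 0.41 are hypothetical, and `top_k_filter` is a name I made up for this example.

```python
# Only 0.41 comes from the earlier example; the other values are hypothetical.
NEXT_WORD_PROBS = {
    "grocery store": 0.41,
    "parking lot": 0.30,
    "haunted house": 0.21,
    "moon": 0.08,
}


def top_k_filter(probs, k):
    """Keep the k most likely tokens and renormalize their probabilities."""
    if k < 1:
        raise ValueError("k must be at least 1")
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    kept = ranked[:k]
    total = sum(p for _, p in kept)
    return {word: p / total for word, p in kept}


print(top_k_filter(NEXT_WORD_PROBS, 2))  # two candidates remain
print(top_k_filter(NEXT_WORD_PROBS, 1))  # greedy decoding: one candidate
```

With k set to 1, “grocery store” is the only candidate left, which is why greedy decoding removes the randomness.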

Top-p sampling

While the top-k value refines the selection pool by limiting it to a fixed number of tokens, top-p narrows it down based on cumulative probability. Each token has a probability between 0 and 1, representing the likelihood that the AI will select it in its response. The AI selects from the most likely tokens in descending order until the total of their probabilities reaches the specified top-p value.

A top-p value of 1, which some tools use as the default, means all possible tokens are considered, so the setting has no effect on sampling. A lower top-p value (e.g., 0.2) filters out less likely options, leading to more focused results. A higher top-p value (e.g., 0.9) allows the AI more freedom to explore lower-probability options, often resulting in more creative outputs.
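Top-p filtering can be sketched the same way: walk down the tokens from most to least likely and stop once their running total reaches the top-p value. As before, only 0.41 comes from the earlier example; the remaining probabilities and the name `top_p_filter` are assumptions for illustration.

```python
# Only 0.41 comes from the earlier example; the other values are hypothetical.
NEXT_WORD_PROBS = {
    "grocery store": 0.41,
    "parking lot": 0.30,
    "haunted house": 0.21,
    "moon": 0.08,
}


def top_p_filter(probs, top_p):
    """Keep the most likely tokens until their cumulative probability reaches top_p."""
    if not 0 < top_p <= 1:
        raise ValueError("top_p must be in the range (0, 1]")
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    kept = []
    cumulative = 0.0
    for word, p in ranked:
        kept.append((word, p))
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(p for _, p in kept)
    return {word: p / total for word, p in kept}


for top_p in (0.2, 0.9, 1.0):
    print(f"top_p={top_p}: {list(top_p_filter(NEXT_WORD_PROBS, top_p))}")
```

With these numbers, a top-p of 0.2 leaves only “grocery store,” 0.9 drops just “moon,” and 1.0 keeps every token.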

Disclaimer: Setting high values for all three sampling parameters increases the randomness of the output and may lead to hallucinations. To reduce this risk, it’s recommended to use the “prompting framework” and “evaluate” the results.

Module 4: Meta-prompting

Have you ever struggled to write the most optimized prompts? Half the challenge of getting quality output from generative AI lies in crafting the right prompts. “Meta-prompting” is a technique where you ask an AI tool to write the prompt for you, which you then use for your actual request. You can also use this technique to improve existing prompts.

Meta-prompts fall into two categories: prompt generation and prompt refinement. Each category includes specific strategies made for different types of requests and intended outputs. Let’s start with the strategies for prompt generation, followed by those for prompt refinement.

Prompt generation strategies

In meta-prompting, prompt generation refers to when generative AI creates prompts from scratch. There are five different strategies, each with its own methods designed for different purposes.

Direct generation

Direct generation involves simply asking the AI to create a prompt for you. It’s the quickest method of meta-prompting and is often referred to as “automatic prompt engineering.” To get started, try asking the AI to generate a prompt based on your specific request.

“Generate a prompt that could help with [insert your AI request here].”

Template request

Template request relies on asking generative AI to create an outline that helps organize the content of your prompts. This type of meta-prompt typically starts with a phrase like “create a template,” followed by an outline structure and your specific request.

“Create a template specifying the most important elements of a generative AI prompt meant to [insert your AI request here].”

Image as reference

This method requires you to provide an image as a reference. The AI will then generate a prompt based on the image and any accompanying text. It can also be used to refine prompts you’ve previously tried.

Attach an image to the prompt, then write:

“Generate a prompt I can use to [insert your AI request here], using the attached image as a style reference.”

Text as reference

This method is similar to the image-as-reference approach, but instead of attaching an image, you attach files like PDF documents. The AI generates a prompt based on the files and any accompanying text, and it can also be used to refine existing prompts.

Upload the files with the prompt, then write:

“Using the attached files, generate a prompt to [insert your AI request here].”
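If you reuse these meta-prompts often, the four single-step strategies so far can be kept as fill-in-the-blank templates. The sketch below uses the exact wording from this section; the dictionary keys and the helper name `build_meta_prompt` are my own. Attaching the image or files still happens in the AI tool itself.

```python
# Template wording is taken from this section; the keys are hypothetical labels.
META_PROMPT_TEMPLATES = {
    "direct_generation": "Generate a prompt that could help with {request}.",
    "template_request": (
        "Create a template specifying the most important elements "
        "of a generative AI prompt meant to {request}."
    ),
    "image_as_reference": (
        "Generate a prompt I can use to {request}, "
        "using the attached image as a style reference."
    ),
    "text_as_reference": "Using the attached files, generate a prompt to {request}.",
}


def build_meta_prompt(strategy, request):
    """Fill the chosen strategy's template with your AI request."""
    if strategy not in META_PROMPT_TEMPLATES:
        raise ValueError(f"unknown strategy: {strategy!r}")
    return META_PROMPT_TEMPLATES[strategy].format(request=request)


print(build_meta_prompt("text_as_reference", "summarize a quarterly report"))
```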

Meta-prompt chaining

You’ve heard of “prompt chaining” before, and now it’s time to combine it with meta-prompting. This strategy is called meta-prompt chaining, and it involves breaking down the process of drafting a prompt into a series of sub-prompts.

“Generate a prompt that identifies the most important qualities of [insert your AI request here].”

After that, use the generated prompt to get a response from the AI. Once you’ve reviewed the output, continue prompting by asking the AI to generate follow-up prompts. This helps you expand on your content step by step and make the most of generative AI using the meta-prompt chaining strategy.
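The chaining loop described above can be sketched in a few lines. Everything here is an assumption for illustration: `ask_model` stands for whatever function sends a prompt to your AI tool and returns its reply, `echo_model` is a stand-in so the sketch runs offline, and the follow-up wording is my own rather than the course’s.

```python
def meta_prompt_chain(request, ask_model, follow_ups=1):
    """Run a meta-prompt chain and return every (prompt, response) step."""
    steps = []

    # Step 1: ask the AI to write a prompt for the request.
    meta_prompt = (
        "Generate a prompt that identifies the most important "
        f"qualities of {request}."
    )
    generated_prompt = ask_model(meta_prompt)
    steps.append((meta_prompt, generated_prompt))

    # Step 2: use the generated prompt to get a response.
    response = ask_model(generated_prompt)
    steps.append((generated_prompt, response))

    # Step 3: ask for follow-up prompts and run each one in turn.
    for _ in range(follow_ups):
        meta_prompt = (
            "Generate a follow-up prompt that expands on this response:\n"
            f"{response}"
        )
        generated_prompt = ask_model(meta_prompt)
        steps.append((meta_prompt, generated_prompt))
        response = ask_model(generated_prompt)
        steps.append((generated_prompt, response))

    return steps


def echo_model(prompt):
    """Stand-in for a real AI tool: it only echoes part of the prompt."""
    return f"[model output for: {prompt[:40]}...]"


for prompt, response in meta_prompt_chain("a product launch email", echo_model):
    print("PROMPT:  ", prompt)
    print("RESPONSE:", response)
```

In practice you would review each response before continuing, as described above, rather than letting the loop run unattended.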

Prompt refinement strategies

Prompt refinement involves generating new prompts from existing ones. This technique is used to fine-tune and improve prompts in order to produce better outputs. The course calls these “power-up strategies,” and they include three approaches for leveling up your prompt game.

Leveling up

In this course, the term “leveling up” refers to asking generative AI to immediately improve your existing prompt. It’s similar to direct generation, where you ask the AI to create a prompt, but instead of starting from scratch, the AI enhances a given prompt to make it more effective.

Remixing

In meta-prompting, “remixing” means asking a generative AI tool to combine multiple prompts into a single prompt. The AI identifies the main points from each prompt and synthesizes them into one prompt that retains all the important information.

Style swap

Last but not least is style swap. In meta-prompting, the term “style swap” means setting the mood and tone of a prompt to create a more vivid and expressive output. To use this technique, ask the AI to rewrite your original prompt with a focus on emotional depth, expressiveness, and passionate language.

Pro tip: You might want to adjust the sampling parameter settings such as temperature, top-k, and top-p to higher values to achieve more creative results. However, keep in mind that increasing these values may lead the AI to hallucinate.